A common concern our clients have before undertaking a new FIPS 140-2 validation is understanding the various phases and the overall time it takes to get from start to finish.

One of the ways we manage our clients’ expectations is by explaining that lab testing usually accounts for only a fraction of the overall FIPS validation schedule. The much longer portion of the process, typically three to five times as long, is simply waiting for the Cryptographic Module Validation Program (CMVP) to review the validation report.

For a typical new validation, the longest part of the project is the time it spends in the FIPS queue. Unfortunately, there are no standard waiting times. Queue length varies with the number of reports in process, and reports are handled on a first-come, first-served basis. Labs have no means of prioritizing reports within the queue, nor do they know where a report sits in the queue at any given time. The queue is a bit of a black hole, probably by design. (For an example of the variables involved, see this recent bulletin about CMVP resource constraints during the rollout of FIPS 140-3: https://csrc.nist.gov/projects/fips-140-3-transition-effort.)

The CMVP process includes two primary phases, each tracked on its own list: the Implementation Under Test (IUT) list and the Modules in Process (MIP) list.

“The IUT list is provided as a marketing service for vendors… The CMVP does not have detailed information about the specific cryptographic module or when the test report will be submitted to the CMVP for validation. When the lab submits the test report to the CMVP, the module will transition from the IUT list to the MIP list.”

https://csrc.nist.gov/Projects/Cryptographic-Module-Validation-Program/Modules-In-Process/IUT-List

“The MIP list contains cryptographic modules on which the CMVP is actively working. … The validation process is a joint effort between the CMVP, the laboratory and the vendor and therefore, for any given module, the action to respond could reside with the CMVP, the lab or the vendor. This list does not provide granularity into which entity has the action.”

https://csrc.nist.gov/Projects/Cryptographic-Module-Validation-Program/Modules-In-Process/Modules-In-Process-List

Lightship has been collecting data on average IUT-to-MIP timelines across all labs for some time now. We thought it would be useful for our clients to understand these timelines when planning a new validation.

The Modules in Process list tracks several statuses, each indicating which entity (or group of entities) is currently working on the report:

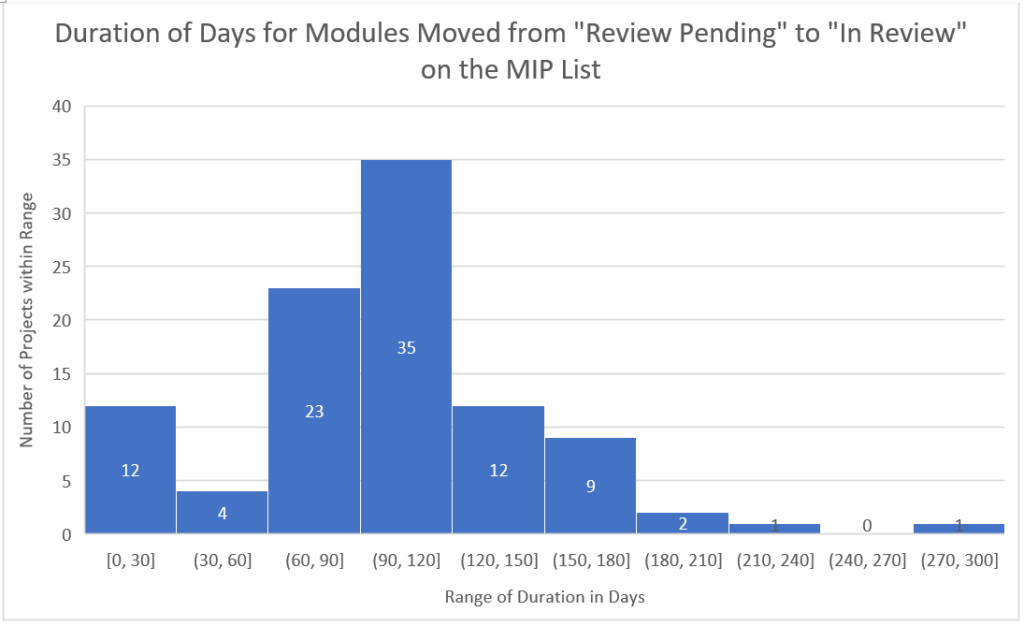

- Review Pending: the queue a report enters after the lab submits it to the CMVP, where it waits for a reviewer to pick it up.

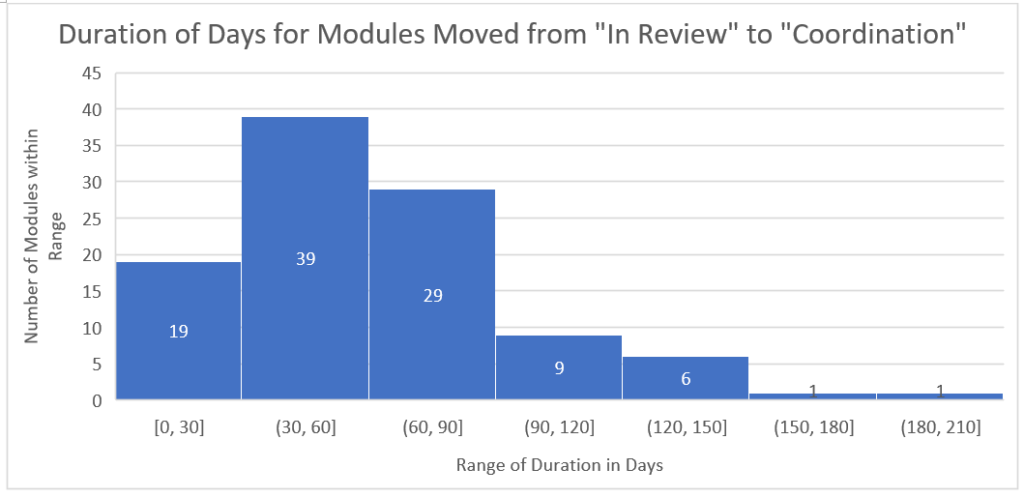

- In Review: the point at which the CMVP reviewer has picked up the report and is actively working on it.

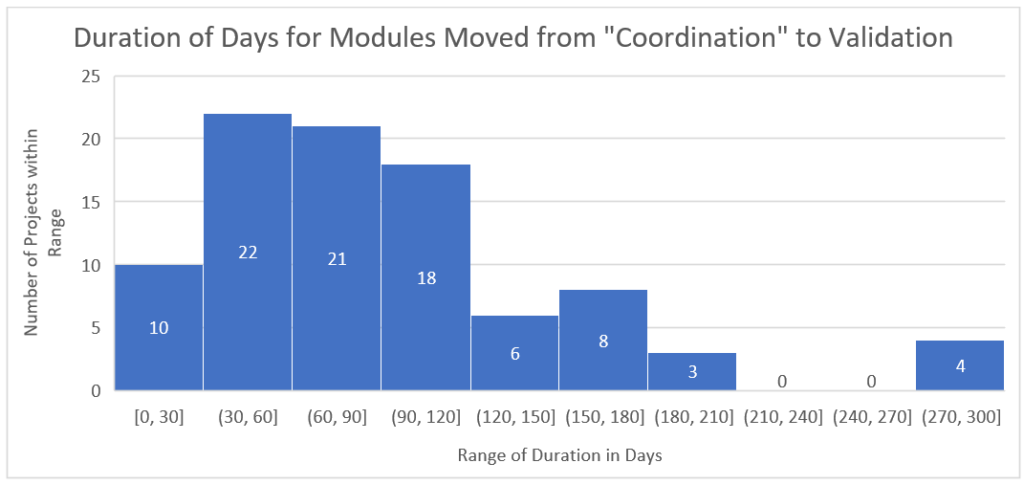

- Coordination: the phase where the CMVP, the lab and the vendor iterate to actively address comments from the review.

While we have data spanning more than 18 months, the figures here cover the period from October 1, 2019 to June 30, 2020 and have been cleaned and filtered*. In total, the data set represents 147 modules.

- Average time from “Review Pending” to “In Review”: 103 days

- Longest queue time recorded (from “Review Pending” to “In Review”): 271 days

- Shortest queue time recorded (from “Review Pending” to “In Review”): 7 days

- Average “In Review” time: 60 days

- Average “Coordination” time: 79 days

- Breakdown by module type: 72 hardware, 67 software, 6 software-hybrid, and 2 firmware modules (147 total)

(Not all of the modules in our data set show movement within the MIP list; this is reflected in the graphs below.)
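Averages like these can be derived by recording when each module changes status on the public MIP list. As a rough illustration only, here is a minimal Python sketch of that kind of calculation. It assumes periodic snapshots of the list stored as `(module_id, date, status)` tuples; the record format, the function names `phase_durations` and `average_days`, and the sample values are all hypothetical, not Lightship’s actual tooling or data.

```python
from datetime import date
from statistics import mean

# Hypothetical snapshots of the MIP list: (module_id, snapshot_date, status).
# Made-up illustrative values, NOT the data set described in this post.
SNAPSHOTS = [
    ("mod-a", date(2019, 10, 1), "Review Pending"),
    ("mod-a", date(2019, 12, 1), "Review Pending"),  # repeat snapshot, same status
    ("mod-a", date(2020, 1, 9), "In Review"),
    ("mod-a", date(2020, 3, 9), "Coordination"),
    ("mod-b", date(2019, 11, 1), "Review Pending"),
    ("mod-b", date(2019, 11, 8), "In Review"),
]


def phase_durations(snapshots):
    """Return {status: [days spent in that status, one entry per module]}.

    A phase is counted only once a later snapshot shows the module in a
    different status, so a module's current (last) status is skipped and a
    module with no movement contributes nothing.
    """
    by_module = {}
    for module_id, day, status in snapshots:
        by_module.setdefault(module_id, []).append((day, status))

    durations = {}
    for history in by_module.values():
        history.sort()  # chronological order
        start_day, current = history[0]
        for day, status in history[1:]:
            if status != current:
                # The phase ran from its first sighting until this change.
                durations.setdefault(current, []).append((day - start_day).days)
                start_day, current = day, status
    return durations


def average_days(snapshots):
    """Average number of days spent in each completed phase."""
    return {status: mean(days) for status, days in phase_durations(snapshots).items()}


print(average_days(SNAPSHOTS))  # {'Review Pending': 53.5, 'In Review': 60}
```

Because status changes are only observed when a snapshot is taken, durations measured this way are accurate only to the snapshot interval.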

Hopefully, this information will help your organization set realistic expectations for the timeline of a full validation.

Having your module on the MIP list is typically sufficient to engage in procurement activities with federal agencies, as they understand that the queue length is beyond a vendor’s control. As of this writing, vendors should expect a queue time of approximately 9 months.

*The statistics are gathered from publicly available information. While we have attempted to be as accurate as possible, cleaning the data presents some challenges due to the way NIST presents it.

Our test experts are here to help! Contact Lightship Security today for support with your FIPS 140-2 and FIPS 140-3 needs!

Jason has been involved in the leadership of different cyber security companies, including being responsible for the accreditation, management and profitable growth of several government-accredited IT security laboratories. Jason drives the Lightship vision of modernizing the product certification landscape.